Migrating to Airflow from Cron

This post is my overview of Apache Airflow after using it for the first time.

Reason behind migration 🤔

At Vymo, we rely heavily on cron jobs to automate our day-to-day tasks, and that reliance keeps growing rapidly. This has led to the following problems:

- If a critical job failed to run the previous day, we have to work out why it did not run and what caused the failure, because the execution of the job is not transparent.

- Job execution times have increased because hundreds of jobs run on the same server.

- To investigate either problem, we keep each job's output logs on the server where the job ran. Because the logs are not centralized, finding the relevant information in them is expensive for developers.

- Re-triggering a failed job also requires updating that job's schedule and redeploying the crontab.

Cron is reaching its limits for us, and we need:

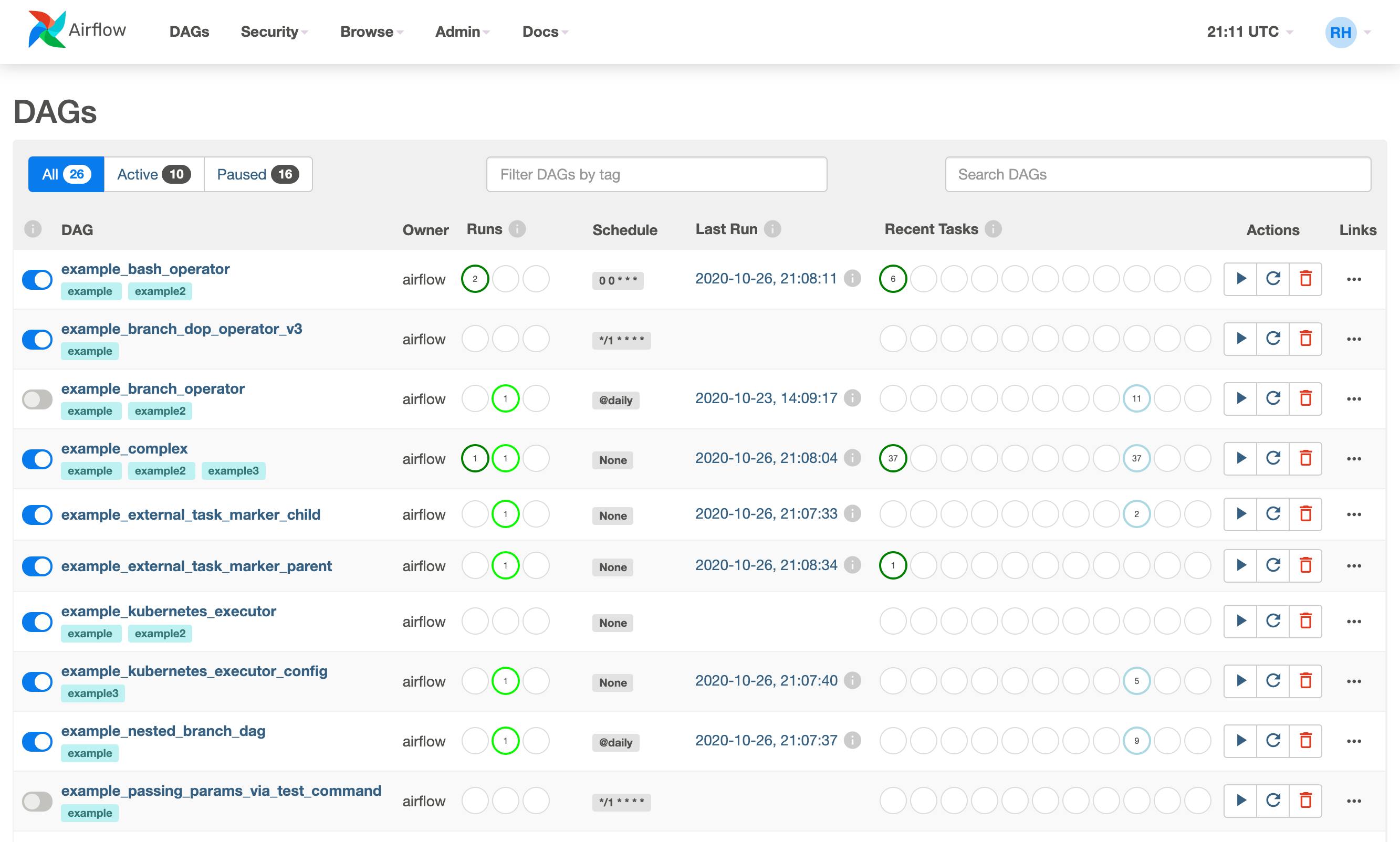

- A way to handle the jobs in a centralized place.

- A well-defined UI for the transparency of the job's performance.

- Error alerting and reporting.

- Job run-time analysis.

Here comes Apache Airflow 🚀

To meet these requirements, we turned to Apache Airflow, an open-source tool for configuring, scheduling, and monitoring jobs. Its DAG-based workflows offer a simple way to build, schedule, test, and scale complex jobs.

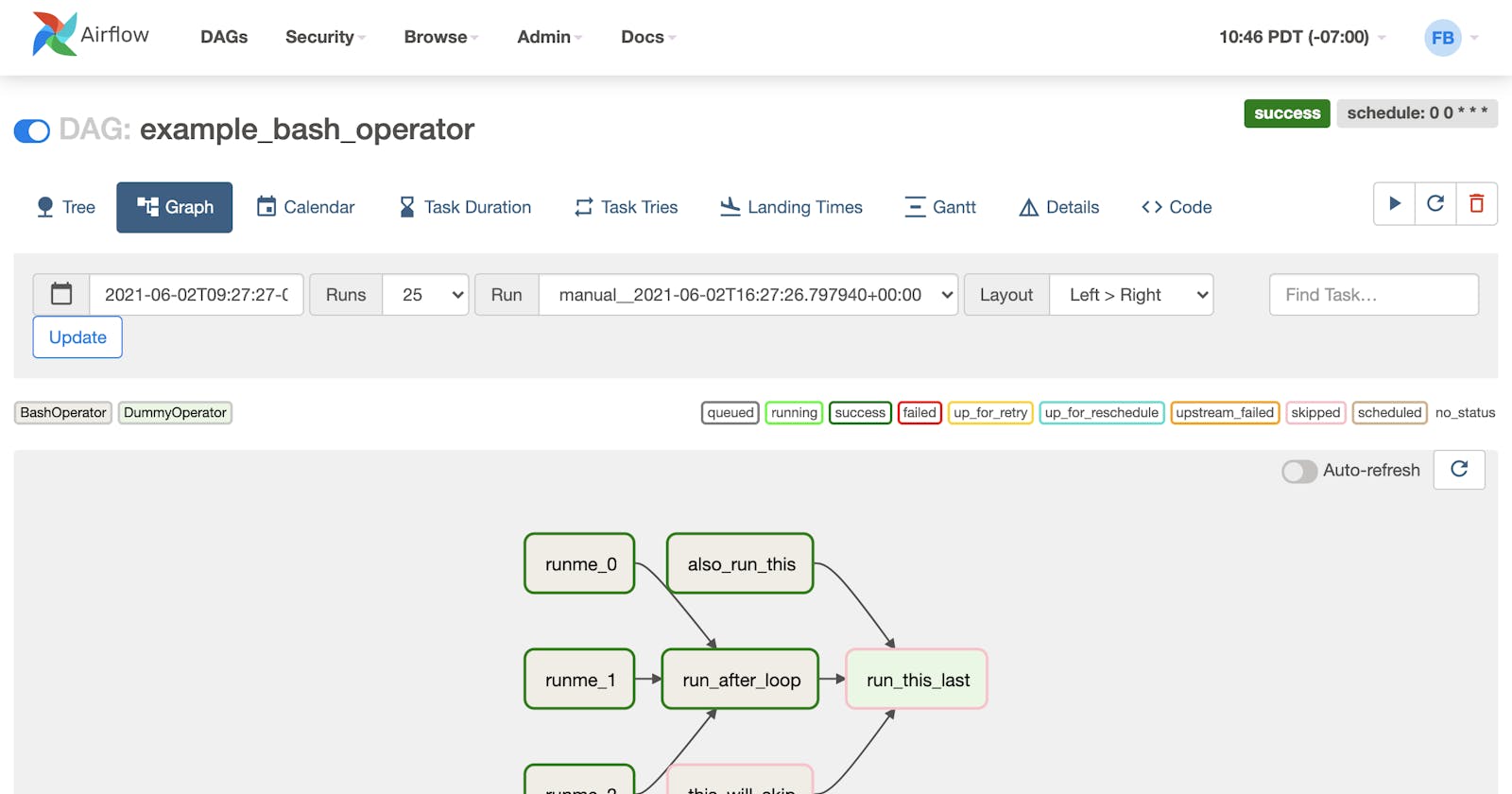

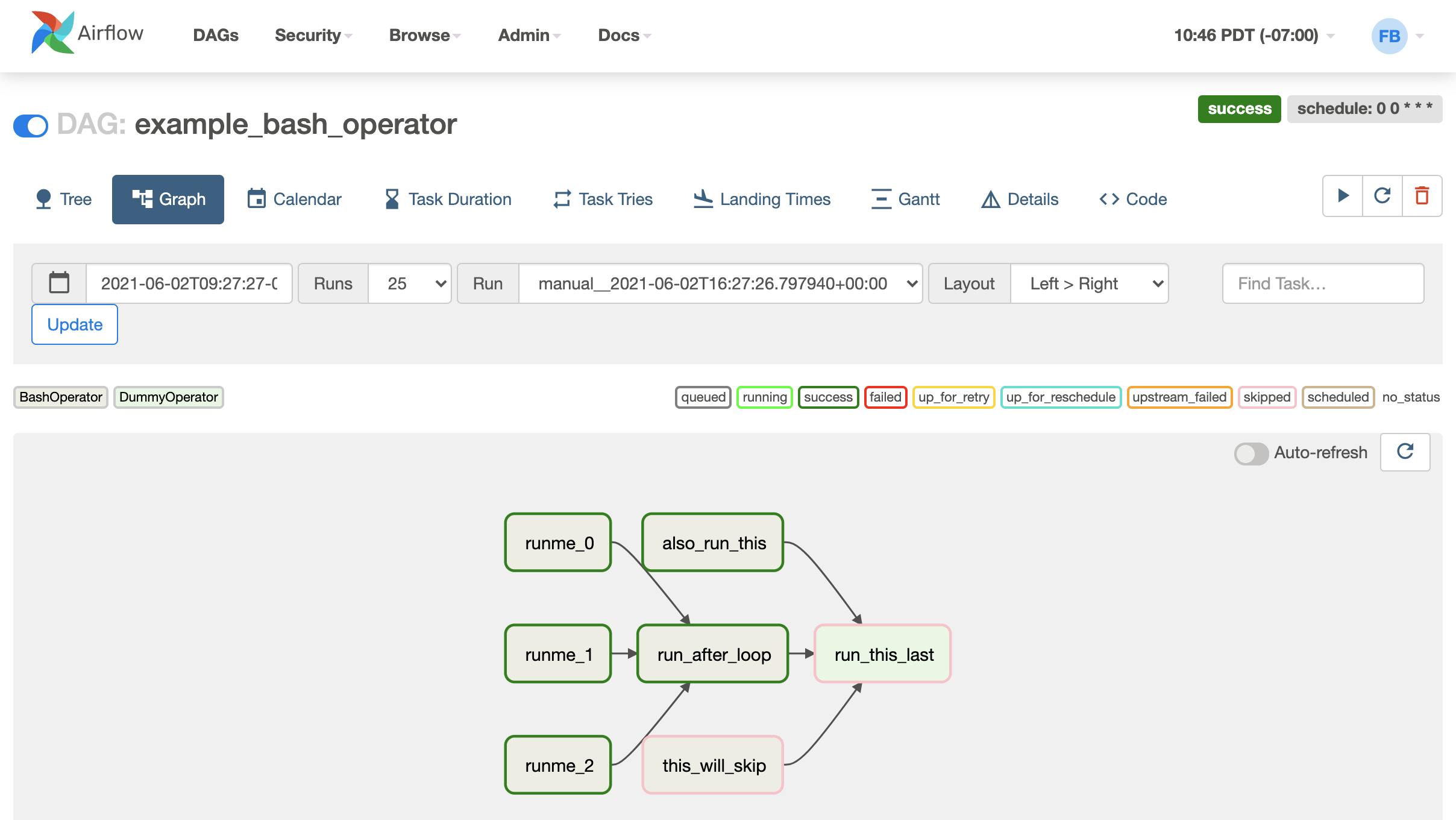

DAGs

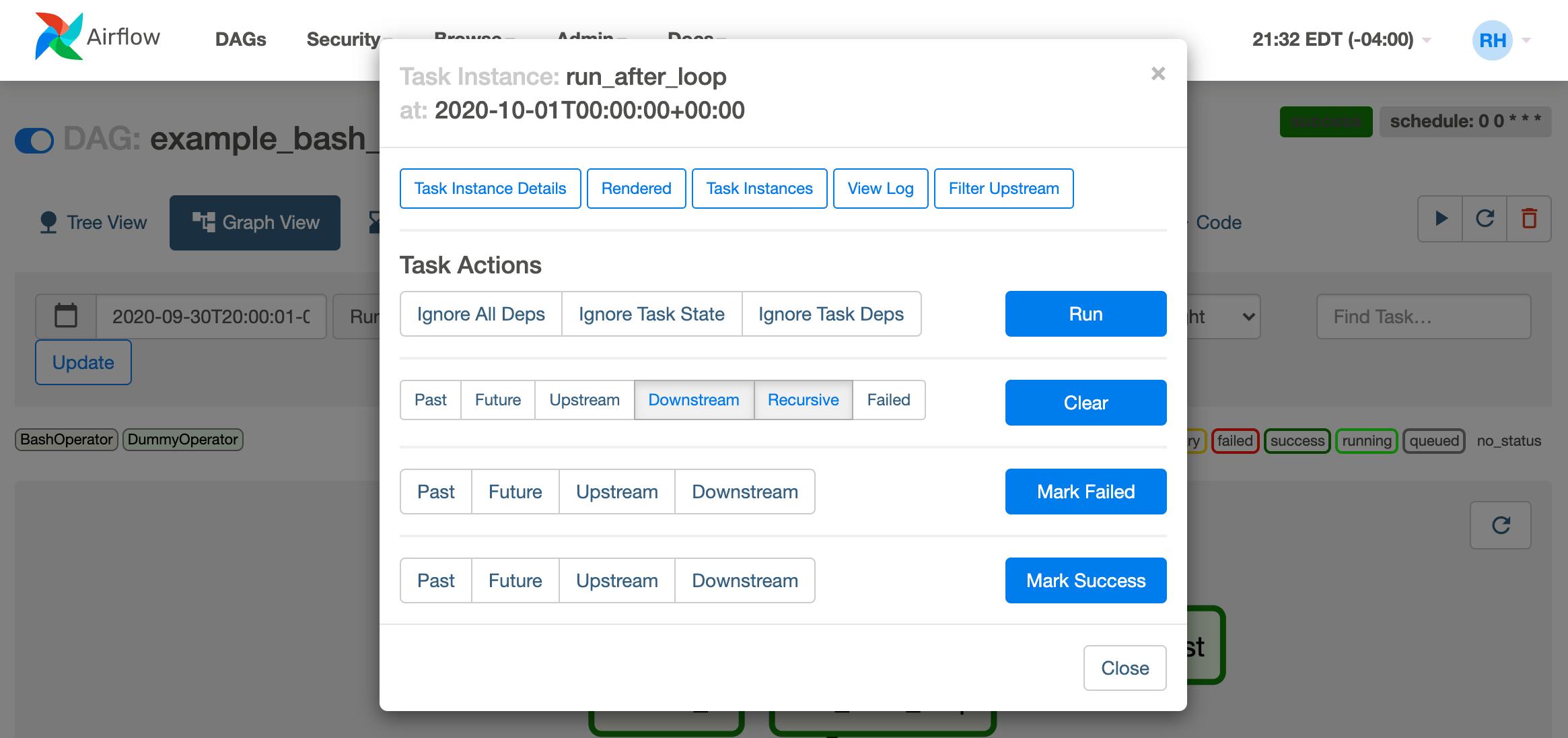

A Directed Acyclic Graph (DAG) is a workflow, defined in Python, that describes the tasks in a job and the order in which they depend on one another. A DAG can also be used to manually re-trigger or restart a failed job. The tasks inside a DAG are created with operators.
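To make the "directed acyclic" part concrete, here is a minimal sketch that uses Python's standard-library `graphlib` module rather than Airflow itself. The job names are made up for illustration. It shows how a set of dependencies determines a valid run order, and why a cycle makes a workflow impossible to schedule.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical job names; each key maps to the set of jobs it depends on.
dependencies = {
    "extract_leads": set(),
    "transform_leads": {"extract_leads"},
    "load_report": {"transform_leads"},
    "send_summary": {"load_report"},
}

# static_order() yields every job only after all of its upstream jobs.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)


def has_cycle(graph):
    """Return True if the dependency graph cannot be ordered."""
    try:
        TopologicalSorter(graph).prepare()
    except CycleError:
        return True
    return False


# Two jobs that wait on each other can never start, so this is not a DAG.
print(has_cycle({"a": {"b"}, "b": {"a"}}))
```

Airflow applies the same idea when it decides which task in a DAG can run next, although its scheduler is far more involved than this sketch.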

Operators

An operator is a template for a specific kind of task in a job. You can use common operators (such as BashOperator to execute shell scripts or PythonOperator to run Python code), or you can create your own custom operator.

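Here's a code snippet, adapted from the Airflow docs, that calls an HTTP endpoint and then sends an email with EmailOperator once the call succeeds. The imports, the task_id values, start_date, and html_content are my additions so that the DAG parses on Airflow 2.x; import paths can differ between versions, and SimpleHttpOperator needs the HTTP provider package installed:

    from datetime import datetime
    from airflow import DAG
    from airflow.operators.email import EmailOperator
    from airflow.providers.http.operators.http import SimpleHttpOperator

    with DAG("my-dag", start_date=datetime(2024, 1, 1)) as dag:
        ping = SimpleHttpOperator(task_id="ping", endpoint="http://example.com/update/")
        email = EmailOperator(task_id="email", to="admin@example.com", subject="Update complete", html_content="Done")

        ping >> email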
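The custom-operator option mentioned above can be sketched roughly as follows. `GreetOperator` and its `name` parameter are hypothetical. The `try`/`except` stand-in exists only so the sketch runs on a machine without Airflow installed; in a real project you would import `BaseOperator` directly.

```python
try:
    from airflow.models.baseoperator import BaseOperator
except ImportError:
    # Stand-in so the sketch can run without Airflow installed.
    class BaseOperator:
        def __init__(self, task_id, **kwargs):
            self.task_id = task_id


class GreetOperator(BaseOperator):
    """Hypothetical custom operator that builds a greeting."""

    def __init__(self, name, **kwargs):
        # Pass task_id and other common arguments to the base class.
        super().__init__(**kwargs)
        self.name = name

    def execute(self, context):
        # Airflow calls execute() when the task runs.
        message = f"Hello, {self.name}!"
        print(message)
        return message


# Outside a scheduler you can call execute() directly to try it out.
greet = GreetOperator(task_id="greet", name="Vymo")
greet.execute(context={})
```

The pattern is the same for any custom operator: accept your own parameters in `__init__`, forward the rest to `BaseOperator`, and put the actual work in `execute`.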

Easy UI to monitor logs

For each task run, Airflow provides easy access to the logs, which makes monitoring transparent.

Alerting

By default, Airflow provides email alerts for job failures. You can also set up Slack alerts using the Slack operator.

How-to Guide for Slack Operators
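As a rough illustration of failure alerting, the sketch below builds a Slack message from the context that Airflow passes to a failure callback. It is an assumption-laden example, not our production setup: it posts to a plain Slack incoming webhook with the standard library instead of using the Slack operator, the webhook URL is a placeholder, and the function names are made up.

```python
import json
import urllib.request

# Placeholder; replace with a real Slack incoming-webhook URL.
SLACK_WEBHOOK_URL = "https://hooks.slack.com/services/XXX/YYY/ZZZ"


def build_failure_message(context):
    """Format the details of a failed task run as Slack message text."""
    ti = context["task_instance"]
    return (
        ":red_circle: Task failed\n"
        f"DAG: {ti.dag_id}\n"
        f"Task: {ti.task_id}\n"
        f"Error: {context.get('exception')}\n"
        f"Logs: {ti.log_url}"
    )


def notify_slack_on_failure(context):
    """Post the failure message to Slack; intended as an on_failure_callback."""
    payload = json.dumps({"text": build_failure_message(context)}).encode("utf-8")
    request = urllib.request.Request(
        SLACK_WEBHOOK_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    urllib.request.urlopen(request, timeout=10)


# Wiring it into a DAG (shown as a comment because it needs Airflow):
# DAG("my-dag", default_args={"on_failure_callback": notify_slack_on_failure})
```

Keeping the message formatting separate from the HTTP call makes the formatting easy to check without sending anything to Slack.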

Job Analysis

Airflow provides a monitoring interface that gives a quick overview of each job and lets you re-trigger or clear DAG runs.

With these features, we have set up Slack alerts for error reporting, and we can analyze our DAGs and monitor their logs with ease in one centralized place.

That is my overview of Airflow at the time of writing, and I highly recommend it. I still have a lot to learn about Airflow, but I hope this post has made you interested in it. You can learn more about Airflow from here.

Feel free to reach out to me if you have any queries. Thank you!